What is Kubernetes Used For? A Comprehensive Guide for Beginners

What Is Kubernetes Used For in Simple Terms?

At its core, Kubernetes is used for managing, scaling, and automating the deployment of containerized applications. It was originally designed by Google and is now maintained by the Cloud Native Computing Foundation (CNCF). People in tech tend to shorten Kubernetes to K8s, a name that rolls off the tongue once you hear it a couple of times. The system itself is open source and was built to take the grunt work out of deploying, scaling, and managing applications that are bundled up in containers.

For better or worse, it has pretty much become the default playbook for anyone working in cloud-native software.

A typical K8s setup looks a bit like a digital kaleidoscope, where hundreds of containers dart around a shared cluster of machines. Those machines could be dusty old rack servers in a basement, shiny VMs in a public cloud, or some mix of both, depending on what the budget can stand that week.

The real magic, or maybe the real relief, is that K8s hides most of the wiring and plumbing. You simply tell it how many replicas of a service you need or which pods should get the latest code first, and the system loops forever until reality matches the picture you painted in YAML. That continuous push toward your desired state lets developers and operators worry a little less about which virtual machine just lagged.
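The "loop until reality matches the desired state" idea can be sketched in a few lines of Python. This is only a toy model, not how Kubernetes is implemented: the `Cluster` class, the `reconcile` function, and the pod names are all hypothetical, and a real controller watches the API server rather than a list in memory.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Toy stand-in for a cluster: it only tracks running replica names."""
    running: list = field(default_factory=list)
    next_id: int = 0


def reconcile(cluster, desired_replicas):
    """Run one reconciliation pass and return the actions that were taken."""
    actions = []
    # Too few replicas: start new ones until the count matches.
    while len(cluster.running) < desired_replicas:
        name = f"pod-{cluster.next_id}"
        cluster.next_id += 1
        cluster.running.append(name)
        actions.append(f"start {name}")
    # Too many replicas: stop the most recently started extras.
    while len(cluster.running) > desired_replicas:
        actions.append(f"stop {cluster.running.pop()}")
    return actions


cluster = Cluster()
print(reconcile(cluster, 3))      # starts three pods
cluster.running.remove("pod-1")   # simulate a crashed pod
print(reconcile(cluster, 3))      # starts one replacement
```

Calling `reconcile` again when nothing has changed returns an empty list, which is the point of the pattern: the loop only acts when actual and desired state differ.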

Kubernetes pods are like little shipping crates, each carrying one or more containers, while nodes act as the delivery trucks that move those crates around. Overhead, a centralized control plane keeps an eye on everything, deciding where to park each pod, nudging misbehaving services back to health, and cranking replicas up or down as traffic dances around.

By letting operators describe an ideal state in a plain YAML file and trusting a set of robust APIs to reach that state, Kubernetes trims the ongoing tedium of manual chores, boosts consistency across dev and production boxes, and generally makes releases less nerve-wracking. The learning curve can feel like climbing a twisty staircase, yet once engineers crest that slope they find a tidy, flexible, and massively scalable toolkit that slots into just about every serious DevOps pipeline and cloud-native project out there.

Why Everyone Is Talking About Kubernetes

Kubernetes keeps coming up in conversations, and for good reason: it actually helps with day-to-day operations. What is Kubernetes used for? It takes care of container orchestration so that you don’t have to. Once you have a cluster, you stop babysitting containers. Instead, you sketch out a target state, such as “three replicas of the API plus a storage class”, and let Kubernetes take care of the details on all the machines it can access.

1. Automating Deployments Across Environments

One answer to the question of what Kubernetes is used for is deployment automation. Engineers write YAML files that describe how applications should run, and Kubernetes makes it happen automatically.

- Need three replicas of a backend service? Kubernetes spins them up.

- One dies? It’s replaced automatically.

- Scaling needed during peak hours? Kubernetes adjusts on demand.
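As a rough illustration of such a declarative description, the sketch below builds a minimal Deployment manifest as a Python dict and prints it as JSON, a format Kubernetes accepts alongside YAML. The `deployment_manifest` helper, the `backend` name, and the image reference are hypothetical placeholders; a production manifest would normally also set resource requests, probes, and more.

```python
import json


def deployment_manifest(name, image, replicas):
    """Build a minimal apps/v1 Deployment manifest as a plain dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


# Hypothetical service name and image, used only for illustration.
manifest = deployment_manifest("backend", "example.com/backend:1.0", replicas=3)
print(json.dumps(manifest, indent=2))
```

Saved to a file, output like this could be handed to `kubectl apply -f`, after which Kubernetes works to keep three replicas running.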

2. Keeping Applications Resilient and Self-Healing

Another reason teams keep asking what Kubernetes is used for is its self-healing. Kubernetes keeps the app healthy by:

- Replacing containers that fail

- Rescheduling pods onto healthy nodes

- Rolling out updates gradually to avoid downtime

In environments where uptime is essential, this kind of hands-off approach makes a world of difference.

3. Simplifying Multi-Service Architectures

When looking at complicated systems comprising dozens of microservices, developers often ask themselves: What is Kubernetes used for in this service soup?

The answer is: it removes much of the coordination burden. Kubernetes handles service discovery, supports secure communication between services, lets each service scale independently, and monitors everything from a central control plane.

Which means:

- APIs, databases, frontends—all orchestrated automatically.

- Secure secrets management.

- Traffic routing and ingress control without manual hacks.

4. Keeping Dev, Staging, and Production Aligned and Consistent

A key example of what Kubernetes is used for is keeping environments consistent. Developers can run the same Kubernetes configurations on:

- Their local computer

- A pre-production or staging area

- The production clusters hosted on cloud services

This reduces how often teams hit the “it works on my machine” problem and speeds up testing and CI/CD workflows.

5. Handling Traffic Surges

Say you run an e-commerce store that sees traffic surges on Black Friday. What is Kubernetes used for in this stressful scenario?

With autoscaling configured, it detects the increase in load and scales services horizontally by launching more replicas. When traffic drops, it reduces the replica count, which helps control infrastructure costs.

Such elasticity used to require a complex set of manual scripts. Now, it is part of the standard platform features.

6. Portability Across Clouds and Hybrid Deployments

What is Kubernetes good for besides scaling and deployments? Freedom from any single cloud.

Kubernetes can run:

- On AWS, Azure, or Google Cloud

- On-premises within a private datacenter

- In hybrid or edge settings

Because of this flexibility, vendor lock-in becomes far less of a concern, and multi-cloud approaches become practical.

7. When Kubernetes Might Be Overkill

It’s perfectly reasonable to ask: What’s Kubernetes used for—and when should you avoid it?

With low traffic and a small app, Kubernetes may be too much for your needs. The steep learning curve along with managing a cluster adds unnecessary work for simple scenarios.

In these instances, a better option could be a virtual machine running a Docker container with a reverse proxy.

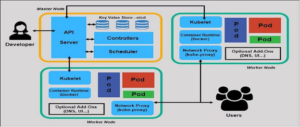

Kubernetes Architecture

Kubernetes centers on the Control Plane, historically known as the master node, which keeps the cluster in its desired state. It includes the API Server, which acts as the entry point for all administrative operations and handles communication among users, components, and the cluster.

Configuration and state data are kept in etcd, a distributed key-value store. The Scheduler assigns workloads (pods) to suitable worker nodes based on resource availability and policies, while the Controller Manager monitors nodes, pods, and deployments to make sure the actual state of the cluster matches the intended state.

The Worker Nodes are where the application containers actually run. Every node has a Kubelet, an agent that makes sure containers are running according to control plane directives. It manages the lifecycle of pods on its node and stays in continuous contact with the API server. Kube Proxy handles networking on each node, routing traffic to pods from inside and outside the cluster. Worker nodes also run a container runtime, such as containerd or CRI-O, which runs the containers themselves (Docker Engine needs the cri-dockerd adapter since Kubernetes 1.24 removed dockershim).
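To make the Scheduler's role a little more concrete, here is a deliberately simplified sketch of the filter-then-score idea: discard nodes that cannot fit the pod, then pick the one with the most CPU left over. The real kube-scheduler weighs many more factors (affinity, taints, topology, and so on); the `schedule` function, the node names, and the resource numbers are made up for illustration.

```python
def schedule(pod, nodes):
    """Pick a node for the pod: filter by fit, then score by leftover CPU."""
    # Filter step: keep only nodes with enough free CPU and memory.
    feasible = [
        node for node in nodes
        if node["free_cpu"] >= pod["cpu"] and node["free_mem"] >= pod["mem"]
    ]
    if not feasible:
        return None  # in a real cluster the pod would stay Pending
    # Score step: prefer the node with the most CPU left after placement.
    best = max(feasible, key=lambda node: node["free_cpu"] - pod["cpu"])
    return best["name"]


# Hypothetical nodes; CPU in millicores, memory in MiB.
NODES = [
    {"name": "node-a", "free_cpu": 500, "free_mem": 1024},
    {"name": "node-b", "free_cpu": 2000, "free_mem": 4096},
    {"name": "node-c", "free_cpu": 4000, "free_mem": 256},
]

print(schedule({"cpu": 1000, "mem": 512}, NODES))
```

Here only `node-b` has both enough CPU and enough memory, so it is the node returned.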

Kubernetes uses a number of abstractions to manage applications. Pods are the smallest deployable unit and contain one or more containers that share resources. Deployments manage the intended state and lifecycle of applications and enable capabilities like rolling updates and rollbacks.

ReplicaSets make sure a specified number of identical pods are running at all times. Services abstract the networking and expose pods to other services or users, offering stable endpoints for application access. Namespaces isolate resources inside the same cluster, allowing for multi-tenant or environment-specific setups.

Built for scalability, reliability, and automation, the Kubernetes architecture splits duties across separate components and abstracts away complex infrastructure management. This lets developers and DevOps teams launch and maintain apps with more speed, adaptability, and consistency across environments.

Kubernetes Use Cases

Application Deployment

Kubernetes simplifies and automates the deployment of containerized applications. Developers containerize their programs, usually with Docker, and Kubernetes then makes sure these containers run in a consistent, repeatable manner across environments. Users define deployment settings for objects like Deployments and Pods in YAML or JSON files.

Kubernetes keeps the intended number of pod replicas active, replaces failed containers automatically, and can roll out updates without downtime. Because it abstracts the underlying infrastructure and offers a dependable foundation for running any kind of application (a web app, backend API, or batch processing job), it is a good fit for modern DevOps and cloud-native approaches.

Scaling Applications

Kubernetes provides strong horizontal and vertical scaling options. The Horizontal Pod Autoscaler (HPA) automatically raises or lowers the pod count based on CPU or memory use or on custom metrics. This keeps performance up during peak loads and cuts costs during quiet periods.

Vertical scaling adjusts the resources (CPU, memory) allocated to pods. Kubernetes also supports cluster autoscaling, which adds or removes nodes depending on workload requirements. Companies with fluctuating traffic, including e-commerce sites, streaming platforms, or event-driven services, benefit most from this dynamic scaling.
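The core of the horizontal autoscaling decision is a small calculation that the Kubernetes documentation gives as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The sketch below applies that formula; the function name, the `min_replicas` and `max_replicas` defaults, and the sample numbers are assumptions for illustration, while the 0.1 tolerance mirrors the documented default.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Apply the HPA scaling formula, clamped to the configured bounds."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, wanted))


# 4 pods averaging 90% CPU against a 60% target: scale out.
print(desired_replicas(4, 90, 60))   # 6
# 4 pods averaging 20% CPU against a 60% target: scale in.
print(desired_replicas(4, 20, 60))   # 2
# 63% against a 60% target is within tolerance: no change.
print(desired_replicas(4, 63, 60))   # 4
```

The real autoscaler also accounts for pods that are not yet ready, missing metrics, and stabilization windows, none of which this sketch models.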

Managing Microservices

Kubernetes is well suited to managing microservices-based architectures. In a microservices architecture, every service is built, released, and scaled independently. Kubernetes supports this by isolating services in separate pods, handling service discovery through Services, and enabling communication among them. It also provides Network Policies to control traffic flow and Namespaces to segregate environments (e.g., dev, staging, production).

Tools like Istio or Linkerd can add service mesh features including traffic routing, load balancing, observability, and secure communication between services. By restarting failed pods and spreading load across healthy nodes, Kubernetes supports high availability and resilience.

CI/CD Implementation

Kubernetes fits well with Continuous Integration and Continuous Deployment (CI/CD) pipelines that automate software delivery. A pipeline can build container images, push them to registries, and roll out new versions to the cluster automatically.

With CI/CD tools such as Jenkins, GitLab CI, GitHub Actions, or Argo CD, teams can implement GitOps workflows in which changes in Git repositories trigger deployments automatically. Should failures arise, Kubernetes makes it simple to roll back; it also supports phased releases (canary deployments, blue-green deployments). This improves application stability, lowers human error, and raises deployment speed.

Key Kubernetes Features

- Self-healing: Kubernetes automatically restarts failed containers, replaces and reschedules those that go down, and kills containers that do not respond to user-specified health checks.

- Automated rollouts and rollbacks: Kubernetes can gradually roll out changes to your application or its configuration and automatically undo them should anything go wrong.

- Service discovery and load balancing: Kubernetes exposes containers through DNS names or IP addresses and balances traffic among them.

- Horizontal scaling: Using the Horizontal Pod Autoscaler (HPA), Kubernetes can dynamically scale apps up or down based on CPU, memory, or custom metrics.

- Secret and configuration management: Kubernetes lets you store and manage sensitive data like passwords, OAuth tokens, and SSH keys separately from the application code.

- Storage orchestration: Kubernetes can automatically mount the storage system of your choice, such as local storage, AWS EBS, or NFS.

Kubernetes Service Discovery

In Kubernetes, service discovery is how programs inside a cluster locate and communicate with one another. Kubernetes gives each Pod its own IP address when it is created, but because pods are ephemeral (they can die and be replaced), a stable way to reach them is required. Services provide it, and they come in several types:

- ClusterIP: The default type. Exposes the service on an internal cluster IP. Useful for communication between services inside the cluster.

- NodePort: Exposes the service on a static port on each node’s IP, making it reachable from outside the cluster.

- LoadBalancer: Automatically provisions an external load balancer, usually through a cloud provider, to send traffic to the service.

- Headless Services: For stateful or custom routing applications, these let clients discover pod IPs directly without a load balancer.
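Two mechanics sit behind this: a Service picks its backing pods by label selector, and other workloads reach it through a predictable DNS name. The sketch below models both in plain Python. The helper names, pod data, and IPs are hypothetical, and `cluster.local` is the common default cluster domain, which individual clusters may override.

```python
def service_dns_name(service, namespace="default", cluster_domain="cluster.local"):
    """Build the in-cluster DNS name that resolves to a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def select_endpoints(pods, selector):
    """Return IPs of pods whose labels contain every selector key/value."""
    return [
        pod["ip"] for pod in pods
        if all(pod["labels"].get(key) == value for key, value in selector.items())
    ]


# Hypothetical pods; a replaced pod would come back with a different IP.
PODS = [
    {"ip": "10.0.0.4", "labels": {"app": "api", "tier": "backend"}},
    {"ip": "10.0.0.7", "labels": {"app": "api", "tier": "backend"}},
    {"ip": "10.0.1.2", "labels": {"app": "web", "tier": "frontend"}},
]

print(service_dns_name("api", namespace="prod"))
print(select_endpoints(PODS, {"app": "api"}))
```

Clients keep using the same DNS name even as the list of matching pod IPs changes, which is what makes the endpoint stable.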

Kubernetes Deployment Strategies

- Rolling Update: The default approach, in which old pods are gradually replaced with new ones. It suits most production environments because it can avoid downtime.

- Recreate: Shuts down all old pods before creating new ones. This is simpler but causes downtime.

- Blue-Green Deployment: Runs two environments side by side, blue for the old version and green for the new. Traffic is switched to green only after verification.

- Canary Deployment: Gradually releases the updated version to a small number of users or pods before wider distribution. Good for testing features in production. Kubernetes can support this through manual service switching or with tools like Argo Rollouts.

- A/B Testing: Much like a canary, but routes traffic according to user attributes or labels.
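For the rolling update strategy, the pace is governed by two Deployment settings, `maxSurge` (extra pods allowed above the desired count) and `maxUnavailable` (pods allowed to be missing). The simulation below is a simplified sketch of how those limits shape each step; it uses absolute numbers rather than percentages and assumes new pods become ready instantly, which real clusters do not guarantee. The `rolling_update` function itself is illustrative, not a Kubernetes API.

```python
def rolling_update(replicas, max_surge=1, max_unavailable=0):
    """Simulate a rolling update and return (old, new) pod counts per step.

    Simplification: new pods are assumed to become ready instantly.
    """
    if max_surge == 0 and max_unavailable == 0:
        # Kubernetes rejects this combination because no progress is possible.
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    old, new = replicas, 0
    steps = []
    while old > 0 or new < replicas:
        # Start new pods without exceeding replicas + max_surge in total.
        new += min(replicas - new, replicas + max_surge - (old + new))
        # Stop old pods without dropping below replicas - max_unavailable.
        old -= min(old, (old + new) - (replicas - max_unavailable))
        steps.append((old, new))
    return steps


print(rolling_update(3, max_surge=1, max_unavailable=0))
# [(2, 1), (1, 2), (0, 3)]
```

With these settings the total never falls below three running pods, which is why this strategy can avoid downtime when the new version starts cleanly.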

Kubernetes Monitoring Tools

Maintaining the health and performance of Kubernetes applications requires constant monitoring. Important tools used in Kubernetes environments are:

- Prometheus: An open-source monitoring system that gathers metrics from Kubernetes applications and components. Its query language, PromQL, is powerful, and it works nicely with Grafana.

- Grafana: A visualization tool for real-time monitoring dashboards and charts, often used with Prometheus.

- Kube-state-metrics: Offers detailed metrics on the state of Kubernetes objects like Deployments, Nodes, and Pods.

- ELK Stack (Elasticsearch, Logstash, Kibana): Gathers, analyzes, and presents logs from applications and Kubernetes system components.

- Fluentd: A log collector capable of gathering and sending logs to external systems like Splunk or Elasticsearch.

- Lens: A Kubernetes IDE that gives a visual view of clusters, including pod status, logs, and metrics.

Kubernetes Security Best Practices

- Enable RBAC and Use Least Privilege: Define specific roles and permissions using Role-Based Access Control (RBAC). Grant users, services, and applications only the access they truly need. Avoid overly permissive roles like cluster-admin.

- Use Namespaces for Isolation: Group workloads into namespaces and apply resource quotas and policies to restrict what can be done inside each. This helps separate environments such as dev, test, and production.

- Apply Network Policies: Use NetworkPolicy objects to limit which pods can communicate with one another. This lowers the risk of unauthorized service access.

- Secure Secrets: Store secrets in Kubernetes Secret objects and encrypt them at rest using a KMS (Key Management Service). Use tools like Sealed Secrets or HashiCorp Vault for stronger secret management.

- Use Pod Security Standards (PSS): To prevent risky behaviors like privilege escalation, root user access, or host path access, use Pod Security Admission (PSA) to enforce security controls at the pod level.

- Scan Container Images: Detect vulnerabilities in container images before deployment using image scanning tools such as Trivy, Clair, or Anchore. Always use minimal, official, and certified base images.

- Enable Audit Logging: Turn on Kubernetes audit logging to track API requests. This helps identify abnormal behavior and supports incident response.

- Restrict External Access: Reduce the exposure of services using firewalls and Ingress controllers. Unless required, avoid exposing sensitive services to the public internet.

- Keep Kubernetes and Dependencies Up-to-Date: Maintain system integrity and patch known vulnerabilities regularly by updating the Kubernetes cluster, container runtimes, and tools.

- Use TLS and Authentication: Make sure all internal and external communication is encrypted with TLS. Access the cluster through strong authentication methods (certificates, OIDC, etc.).
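To show what least privilege can look like for the RBAC item, the sketch below defines a narrowly scoped Role as a Python dict and checks requests against it. The Role shape follows the `rbac.authorization.k8s.io/v1` API; the `dev` namespace, the `pod-reader` name, and the `allows` helper are hypothetical, and the helper ignores wildcards and resource names that real RBAC evaluation supports.

```python
import json

# A Role that can only read pods in one namespace (names are examples).
POD_READER_ROLE = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "Role",
    "metadata": {"namespace": "dev", "name": "pod-reader"},
    "rules": [
        {
            "apiGroups": [""],  # "" means the core API group
            "resources": ["pods"],
            "verbs": ["get", "list", "watch"],
        }
    ],
}


def allows(role, verb, resource, api_group=""):
    """Toy check: RBAC rules are additive allow-lists, so any match permits."""
    return any(
        api_group in rule["apiGroups"]
        and resource in rule["resources"]
        and verb in rule["verbs"]
        for rule in role["rules"]
    )


print(allows(POD_READER_ROLE, "list", "pods"))
print(allows(POD_READER_ROLE, "delete", "pods"))
print(json.dumps(POD_READER_ROLE, indent=2))
```

Anything not explicitly listed is denied, so this Role can list pods but cannot delete them or touch Secrets.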

Why Choose Cloudlaya?

Cloudlaya partners with everyone from plucky startups to Fortune 500 firms. We help you spin up cloud-native systems that run like clockwork on Kubernetes. Our DevOps pros live and breathe hands-on work:

- Wheeling out full-stack Kubernetes clusters and keeping them tidy.

- Plugging continuous-integration pipelines so code moves fast.

- Crafting microservices blueprints that actually talk to each other.

- Locking down security gaps before they pop up.

- Watching every metric in real time and firing alerts when things wobble.

- Smoothly sliding applications from on-prem servers to public clouds.

Launch day is just the start for us; we stick around to help you scale, tighten security, and shave off unnecessary bites.

Stop Drowning in Cloud Bills

Nice cluster, but wow, the bill can bite if you blink. CostQ.ai tames that surprise by shining a light on where your budget slips away. This in-house tool scans your Kubernetes playground for idle goodies no one is touching. Think sleepy pods or abandoned volumes collecting dust.

- It serves up live dollar-by-dollar graphs sorted by namespace or service.

- Bottom line: unused bits stand out, and you get a push to resize or shut them.

- AWS, Azure, GCP? CostQ.ai plugs into all three, so multi-cloud pricing looks like one neat report.

Bring in CostQ.ai, and your spending stops running blind. Performance stays solid; waste leaves the party.

FAQs Related to “What Is Kubernetes Used For?”

1. What is Kubernetes best for?

Kubernetes is ideally suited to the automated deployment, scaling, and management of containerized applications. It is great at orchestrating microservices, managing large-scale applications, maintaining high availability, and facilitating continuous delivery across development, staging, and production environments.

2. Is Kubernetes cloud or DevOps?

Kubernetes is a DevOps tool that is usually used in cloud settings. It is not confined to the cloud, though: you can run Kubernetes on-premises, on public clouds (AWS, GCP, Azure), or in hybrid configurations. It forms part of cloud-native architecture, and by supporting automation, scalability, and CI/CD processes, it connects cloud architecture and DevOps practices.

3. Who owns Kubernetes?

Though first created by Google, Kubernetes is now maintained by the Cloud Native Computing Foundation (CNCF), which is part of the Linux Foundation. It is an open-source project, and many businesses and developers around the world contribute to it.

4. Is Kubernetes free or paid?

Kubernetes itself is open source and free to use. The costs come from the infrastructure it runs on and from any managed Kubernetes service or commercial support you choose to pay for.

5. Is Kubernetes an API?

Kubernetes is not just an API, but the Kubernetes API is a critical component. It serves as the main interface for managing cluster resources. All operations (deploying applications, scaling, updating, etc.) are done by interacting with the Kubernetes API.
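Because everything goes through that API, each resource lives at a predictable REST path: core resources under `/api/v1` and grouped resources under `/apis/<group>/<version>`. The helper below sketches how such paths are put together. The `resource_path` function and the example names are hypothetical, and it only builds strings rather than contacting a cluster.

```python
def resource_path(resource, namespace=None, group="", version="v1", name=None):
    """Build the REST path for a Kubernetes resource collection or object."""
    # The core group uses /api; every named group uses /apis/<group>.
    prefix = f"/api/{version}" if group == "" else f"/apis/{group}/{version}"
    parts = [prefix]
    if namespace is not None:
        parts.append(f"namespaces/{namespace}")
    parts.append(resource)
    if name is not None:
        parts.append(name)
    return "/".join(parts)


print(resource_path("pods", namespace="default"))
print(resource_path("deployments", namespace="prod", group="apps", name="backend"))
print(resource_path("nodes"))
```

Tools such as `kubectl` and the client libraries ultimately issue HTTP requests against paths of this shape.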

6. Does NASA use Kubernetes?

Yes, NASA and other groups have used Kubernetes to handle scientific workloads. NASA’s Jet Propulsion Laboratory (JPL), for instance, has employed Kubernetes to analyze satellite data and help research with machine learning models.

7. Is Kubernetes easy to learn?

Particularly for novices, Kubernetes has a steep learning curve. It requires knowledge of YAML configurations, networking, storage, and DevOps ideas as well as containerization. With regular practice and good instruction, though, it becomes doable.

8. What problems does Kubernetes solve?

Kubernetes solves several problems:

- Automates container orchestration

- Manages scaling and load balancing

- Handles self-healing (restarting failed apps)

- Enables CI/CD workflows

- Provides service discovery, rolling updates, and resource management

It greatly reduces the operational complexity of running distributed, containerized applications.

9. Does Kubernetes have a GUI?

Yes, Kubernetes has graphical interfaces like:

- Dashboard (official GUI to view and manage resources)

- Lens (popular open-source Kubernetes IDE)

- Rancher, Octant, and other third-party tools

These make cluster management more user-friendly compared to CLI-only interaction.

10. Does Kubernetes use Python?

Kubernetes is written mostly in Go, not Python. However, Python can interact with the Kubernetes API through client libraries such as kubernetes-client (Python SDK), which is helpful for automation and custom controllers.

11. Who needs Kubernetes?

Kubernetes is useful for:

- DevOps engineers managing complex deployments

- Companies with microservices-based architectures

- Teams using CI/CD pipelines

- Startups to enterprises needing scalability, high availability, and automation

If you’re deploying and maintaining containerized applications at scale, Kubernetes is highly beneficial.

12. What are the disadvantages of Kubernetes?

Some disadvantages include:

- Complexity: Steep learning curve and operational overhead

- Resource-intensive: Requires significant computing resources

- Overkill for small projects: Too complex for simple or monolithic apps

- Troubleshooting can be hard: Debugging issues in a distributed system is not always easy

- Initial setup and configuration can be time-consuming
